Data Engineering: your strongest companion

Data chaos? We can help you.

Improved data quality

Information at your fingertips

Scalability

Better security

Get a transparent view of your data

We understand that data sometimes feels like a tangle, but that’s what we’re here for. With a backpack full of experience, our unique Data Platform Framework and powerful tools such as Azure Synapse, Databricks and Snowflake, we have helped many companies tame their data.

Our strength? We combine efficient, tailor-made solutions with a good dose of positivity. We focus on what is possible and ensure that you see results quickly.

Ready for a fresh look at your data with the best tools in the industry?

What does a data engineer do?

A data engineer is the architect behind your digital data. They transform raw data into usable information, lay the foundation for data-driven decisions and optimize data flows. But we go beyond the technical side. Our data engineers dive deep into your business challenges, streamline processes and create solutions that have a real impact.

Working with a Cloubis data engineer means that you not only get advanced data infrastructures, but also a partner who thinks along with you to get the most out of that data.

Our approach

Integration

We start by bringing all your data together. Whether you work with structured databases, unstructured files, external APIs or streaming data, we integrate everything seamlessly. This gives you immediate, uniform access to all your crucial data.

Storage

We store your data safely and efficiently. Our scalable data platform handles large data volumes while ensuring security, privacy and compliance. Whether you choose a data lake, a lakehouse or a data warehouse, we find the storage solution that fits you best.

Analysis

With everything in place, we dive into the analysis. We know you want to gain deep insights from your data, whether through simple overviews or complex machine learning models. Our platform makes it possible, and our data engineers ensure that you can generate insights directly and effortlessly with your favorite tools.

Data Engineering Tools

Experience a new class of data analysis.

Process your data through an integrated open analysis platform.

Take your big data analysis and machine learning to the next level. Databricks is not just a platform; it is the place where you turn data into valuable insights. Thanks to the power of Apache Spark, you process data in the blink of an eye, and with intuitive dashboards you share your findings in no time.

The Snowflake Data Cloud

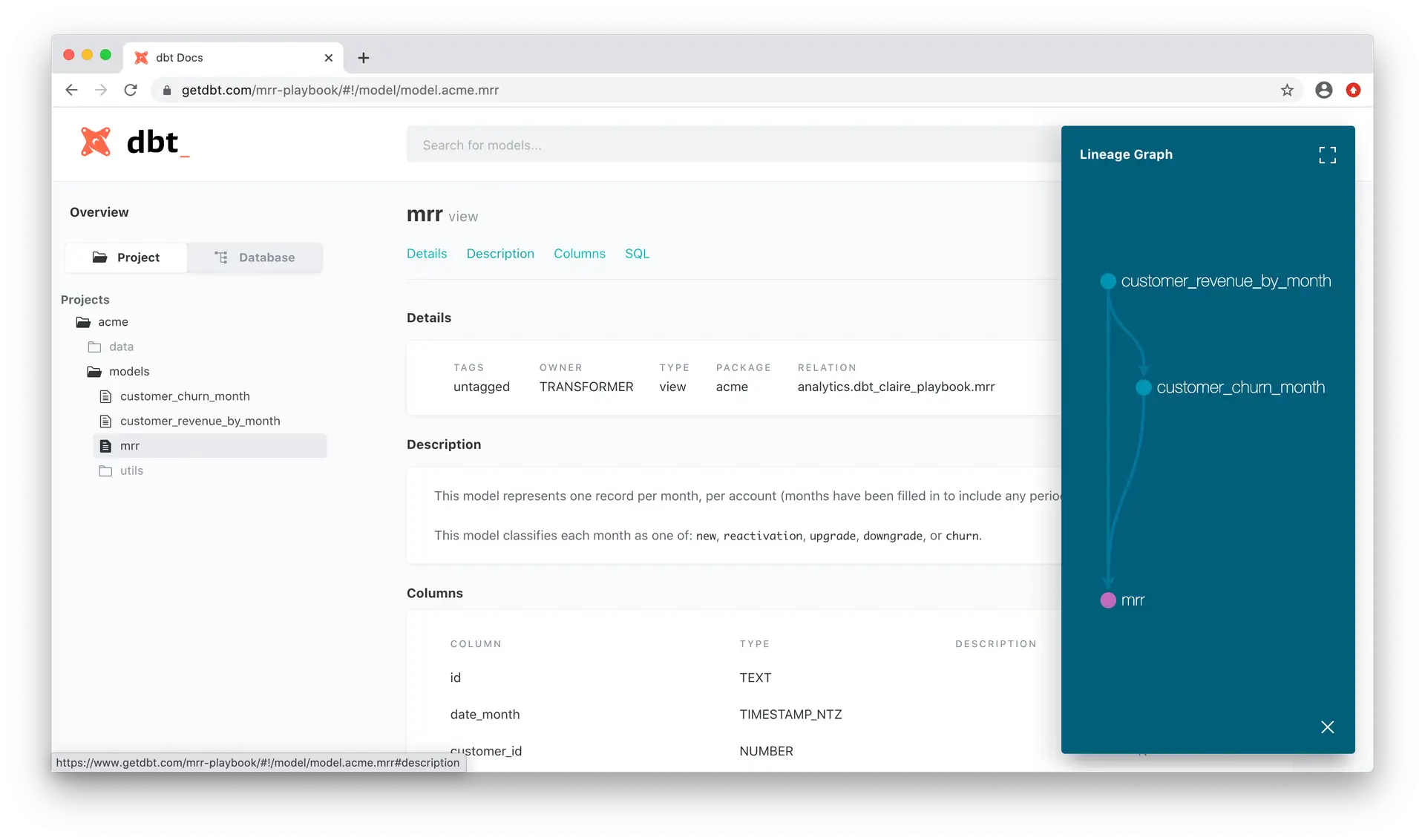

Transform data in your warehouse


Your data engineering companion

With Cloubis, you bring a powerful partner into your organization. Our ‘Team as a Service’ (TaaS) model puts a team of data specialists at your disposal. Data is the fuel for innovation, but it is people and their processes that make the difference. That is why we focus on creating a culture in which data plays the leading role.

We support your team with the necessary knowledge and skills to see data as a strategic asset. At Cloubis, data is more than numbers and graphs; it is the key to growth and progress. We combine advanced technologies with practical insights to give your business a head start.


Let's create a data culture together

At Cloubis, it’s all about your success. Together, we build a strong foundation for your data-driven decision-making, equipped with the right tools and a data model that is perfectly tailored to your business needs.

Extensive expertise: from strategy to governance

Need more information?

Ready to discover the power of data analytics? We’re happy to answer all your questions and explain how we can transform your organization. During a live demo, we’ll show you how we can tailor our services to meet your specific needs.

Book a demo

When you fill out this form, one of our data engineers will contact you to schedule a demo.

Frequently Asked Questions

Still have questions about how Cloubis can help your business with data?